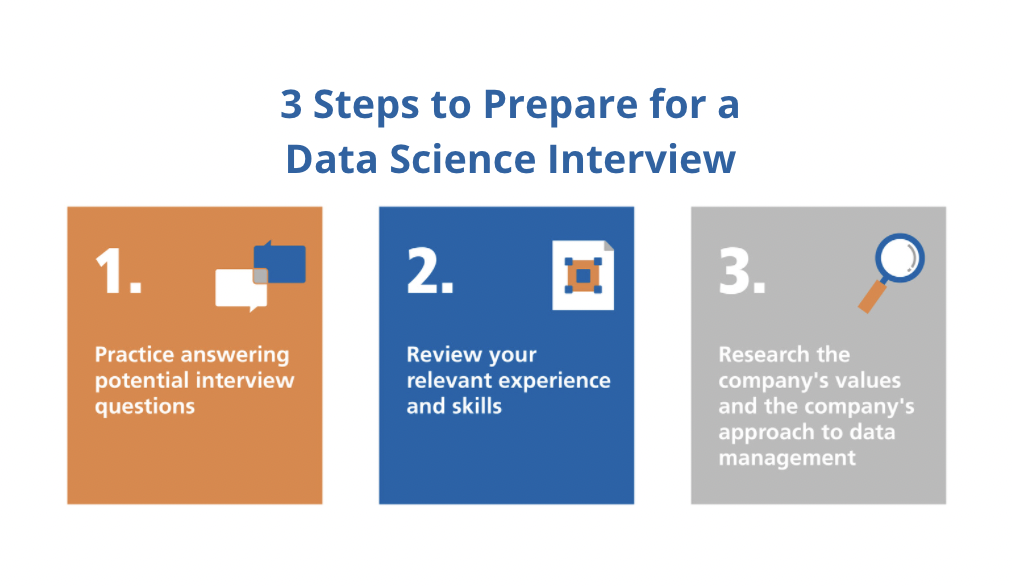

Amazon now typically asks interviewees to code in an online document. This can vary, though: it could be on a physical whiteboard or a virtual one. Check with your recruiter which it will be and practice with it a lot. Now that you know what questions to expect, let's focus on how to prepare.

Below is our four-step prep plan for Amazon data scientist candidates. If you're preparing for more companies than just Amazon, check our general data science interview preparation guide. The first step is one many candidates skip: before investing tens of hours preparing for an interview at Amazon, take some time to make sure it's actually the right company for you.

Practice the method using example questions such as those in section 2.1, or those for coding-heavy Amazon positions (e.g. the Amazon software development engineer interview guide). Practice SQL and programming questions with medium and hard examples on LeetCode, HackerRank, or StrataScratch. Take a look at Amazon's technical topics page, which, although it's designed around software development, should give you an idea of what they're looking for.

Keep in mind that in the onsite rounds you'll likely have to code on a whiteboard without being able to execute it, so practice working through problems on paper. For machine learning and statistics questions, there are online courses built around statistics, probability and other useful topics, some of which are free. Kaggle also offers free courses on introductory and intermediate machine learning, as well as data cleaning, data visualization, SQL, and others.

Google Interview Preparation

Finally, you can post your own questions and discuss topics likely to come up in your interview on Reddit's statistics and machine learning threads. For behavioral interview questions, we recommend learning our step-by-step method for answering behavioral questions. You can then use that method to practice answering the example questions provided in Section 3.3 above. Make sure you have at least one story or example for each of the principles, from a wide range of positions and projects. A great way to practice all of these different types of questions is to interview yourself out loud. This may sound strange, but it will significantly improve the way you communicate your answers during an interview.

One of the main challenges of data scientist interviews at Amazon is communicating your different answers in a way that's easy to understand. As a result, we strongly recommend practicing with a peer interviewing you.

However, be warned, as you may run into the following problems. It's hard to know if the feedback you get is accurate. Peers are unlikely to have insider knowledge of interviews at your target company. On peer platforms, people often waste your time by not showing up. For these reasons, many candidates skip peer mock interviews and go straight to mock interviews with an expert.

Common Data Science Challenges In Interviews

That's an ROI of 100x!

Data Science is quite a large and diverse field. As a result, it is really difficult to be a jack of all trades. Traditionally, Data Science draws on mathematics, computer science and domain expertise. While I will briefly cover some computer science fundamentals, the bulk of this blog will cover the mathematical basics you might need to brush up on (or even take an entire course on).

While I understand most of you reading this are more math heavy by nature, realize that the bulk of data science (dare I say 80%+) is collecting, cleaning and processing data into a useful form. Python and R are the most popular languages in the Data Science space. I have also come across C/C++, Java and Scala.

Tech Interview Preparation Plan

It is common to see most data scientists fall into one of two camps: Mathematicians and Database Architects. If you are in the second camp, this blog won't help you much (YOU ARE ALREADY AWESOME!).

Data collection might mean gathering sensor data, parsing websites or carrying out surveys. After the data is collected, it needs to be transformed into a usable form (e.g. a key-value store in JSON Lines files). Once the data is collected and put in a usable format, it is essential to perform some data quality checks.
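To make the two steps above concrete, here is a minimal sketch that writes records as JSON Lines and then runs a couple of basic quality checks. The sensor fields and the specific checks (missing values, exact duplicates) are assumptions picked for illustration, not a complete checklist.

```python
import json

# Hypothetical sensor readings; the field names are made up for illustration.
raw_records = [
    {"sensor_id": "a1", "temperature": 21.5},
    {"sensor_id": "a2", "temperature": None},   # missing reading
    {"sensor_id": "a1", "temperature": 21.5},   # exact duplicate of the first record
]

def to_json_lines(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(record, sort_keys=True) for record in records)

def quality_report(jsonl_text):
    """Count rows, rows with a missing (null) value, and exact duplicate rows."""
    lines = [line for line in jsonl_text.splitlines() if line.strip()]
    rows = [json.loads(line) for line in lines]
    missing = sum(1 for row in rows if any(value is None for value in row.values()))
    duplicates = len(lines) - len(set(lines))
    return {"rows": len(rows), "rows_with_missing": missing, "duplicate_rows": duplicates}

report = quality_report(to_json_lines(raw_records))
print(report)
```

In practice you would likely add range checks and type checks on top of these, depending on the data source.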

Behavioral Interview Prep For Data Scientists

In fraud cases, it is very common to have heavy class imbalance (e.g. only 2% of the dataset is actual fraud). Such information is important for making the right choices in feature engineering, modelling and model evaluation. For more information, check my blog on Fraud Detection Under Extreme Class Imbalance.
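A quick way to see why the class balance matters for model evaluation is to score a "model" that always predicts the majority class. The sketch below uses made-up labels with the 2% fraud rate from the example; the variable names are hypothetical.

```python
from collections import Counter

# Hypothetical labels: 1 = fraud, 0 = legitimate (2% fraud).
labels = [1] * 20 + [0] * 980

def class_shares(y):
    """Return the share of each class in the label list."""
    counts = Counter(y)
    return {label: count / len(y) for label, count in counts.items()}

shares = class_shares(labels)

# A trivial "model" that never flags fraud.
predictions = [0] * len(labels)

accuracy = sum(p == t for p, t in zip(predictions, labels)) / len(labels)
caught_fraud = sum(1 for p, t in zip(predictions, labels) if p == 1 and t == 1)
fraud_recall = caught_fraud / sum(labels)

print(shares, accuracy, fraud_recall)
```

The accuracy looks excellent while not a single fraud case is caught, which is why metrics such as recall or precision are usually preferred when classes are this skewed.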

The common univariate analysis of choice is the histogram. In bivariate analysis, each feature is compared to the other features in the dataset. This would include the correlation matrix, the covariance matrix or my personal favorite, the scatter matrix. Scatter matrices allow us to find hidden patterns such as features that should be engineered together and features that may need to be eliminated to avoid multicollinearity. Multicollinearity is a real issue for several models, such as linear regression, and hence needs to be taken care of accordingly.
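As a rough illustration of the bivariate step, the sketch below builds a Pearson correlation matrix in plain Python and flags highly correlated feature pairs as multicollinearity candidates. The feature values and the 0.9 threshold are assumptions for the example; in a real project you would more likely use a library such as pandas.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def correlation_matrix(features):
    """Nested dict: matrix[a][b] is the correlation between features a and b."""
    names = list(features)
    return {a: {b: pearson(features[a], features[b]) for b in names} for a in names}

def collinear_pairs(features, threshold=0.9):
    """Feature pairs whose absolute correlation meets the (assumed) threshold."""
    names = list(features)
    matrix = correlation_matrix(features)
    return [
        (a, b)
        for i, a in enumerate(names)
        for b in names[i + 1:]
        if abs(matrix[a][b]) >= threshold
    ]

# Hypothetical data: the same height recorded in two units, plus an unrelated feature.
features = {
    "height_cm": [150.0, 160.0, 170.0, 180.0, 190.0],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],
    "shoe_size": [40.0, 38.0, 44.0, 39.0, 42.0],
}
pairs = collinear_pairs(features)
print(pairs)
```

Here the two height columns carry the same information, so one of them is a candidate for removal before fitting a linear model.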

Imagine using internet usage data. You will have YouTube users going as high as gigabytes while Facebook Messenger users use a couple of megabytes.
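One common way to tame features that span several orders of magnitude is a log transform. The text here does not prescribe a fix, so treat this as an assumed option; the usage numbers below are made up.

```python
import math

# Hypothetical monthly usage in bytes.
usage_bytes = {"youtube_user": 5 * 10**9, "messenger_user": 2 * 10**6}

# On the raw scale one user dwarfs the other.
raw_ratio = usage_bytes["youtube_user"] / usage_bytes["messenger_user"]

# After a base-10 log transform the two values sit a few units apart.
log_usage = {name: math.log10(value) for name, value in usage_bytes.items()}
log_gap = log_usage["youtube_user"] - log_usage["messenger_user"]

print(raw_ratio, log_gap)
```

A ratio in the thousands becomes a gap of only a few units on the log scale, which is much easier for many models to work with.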

Another issue is the use of categorical values. While categorical values are common in the data science world, realize that computers can only comprehend numbers.
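One widely used way to turn categories into numbers is one-hot encoding, where each category gets its own 0/1 column. The sketch below is a minimal plain-Python version with hypothetical color values; libraries such as pandas or scikit-learn offer their own encoders.

```python
def one_hot_encode(values):
    """Return the sorted category list and one 0/1 row per input value."""
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    encoded = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1
        encoded.append(row)
    return categories, encoded

categories, encoded = one_hot_encode(["red", "green", "red", "blue"])
print(categories)
print(encoded)
```

Note that a feature with many distinct categories produces many mostly-zero columns.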

Statistics For Data Science

At times, having too many sparse dimensions will hamper the performance of the model. An algorithm commonly used for dimensionality reduction is Principal Components Analysis, or PCA.
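To give a feel for what PCA does, here is a small sketch that finds the first principal component with power iteration on the covariance matrix, then projects the data onto it. This is an illustrative plain-Python version under simplifying assumptions (tiny made-up dataset, fixed iteration count); a real project would normally rely on a library implementation.

```python
import math

def center(rows):
    """Subtract the column means from every row."""
    n, d = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(d)]
    return [[row[j] - means[j] for j in range(d)] for row in rows]

def covariance(centered_rows):
    """Sample covariance matrix of already-centered rows."""
    n, d = len(centered_rows), len(centered_rows[0])
    return [
        [sum(row[i] * row[j] for row in centered_rows) / (n - 1) for j in range(d)]
        for i in range(d)
    ]

def first_principal_component(rows, iterations=200):
    """Approximate the top eigenvector of the covariance matrix by power iteration."""
    cov = covariance(center(rows))
    d = len(cov)
    vector = [1.0] * d  # starting guess
    for _ in range(iterations):
        product = [sum(cov[i][j] * vector[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in product))
        vector = [x / norm for x in product]
    return vector

def project(rows, component):
    """One-dimensional coordinates of each centered row along the component."""
    return [sum(a * b for a, b in zip(row, component)) for row in center(rows)]

# Hypothetical 2-D points lying roughly along the line y = 2x.
points = [[1.0, 2.0], [2.0, 4.1], [3.0, 5.9], [4.0, 8.0]]
component = first_principal_component(points)
scores = project(points, component)
print(component, scores)
```

The component points along the direction of greatest variance, so the two original columns can be summarized by the single `scores` column with little loss.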

The common categories of feature selection methods and their subcategories are explained in this section. Filter methods are generally used as a preprocessing step.

Common methods under this category are Pearson's Correlation, Linear Discriminant Analysis, ANOVA and Chi-Square. In wrapper methods, we try to use a subset of features and train a model using them. Based on the inferences that we draw from the previous model, we decide to add or remove features from the subset.

Engineering Manager Behavioral Interview Questions

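Common methods under this category are Forward Selection, Backward Elimination and Recursive Feature Elimination. Among regularization-based methods, LASSO and RIDGE are common ones. The regularizations are given in the equations below as reference, where $y_i$ is the target, $x_i$ the feature vector, $\beta$ the coefficients and $\lambda$ the regularization strength:

Lasso: $$\min_{\beta} \sum_{i=1}^{n} \left(y_i - x_i^\top \beta\right)^2 + \lambda \sum_{j=1}^{p} |\beta_j|$$

Ridge: $$\min_{\beta} \sum_{i=1}^{n} \left(y_i - x_i^\top \beta\right)^2 + \lambda \sum_{j=1}^{p} \beta_j^2$$

That being said, it is important to understand the mechanics behind LASSO and RIDGE for interviews.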
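A compact way to see the mechanical difference between the two penalties is the one-coefficient case. Assuming a single coefficient whose unpenalized least-squares estimate is `b`, minimizing `(b - beta)**2 + lam * abs(beta)` gives soft-thresholding, while minimizing `(b - beta)**2 + lam * beta**2` gives proportional shrinkage. The function names below are hypothetical and the sketch covers only this simplified setting.

```python
def lasso_1d(b, lam):
    """Minimizer of (b - beta)**2 + lam * abs(beta): soft-threshold at lam / 2."""
    shrunk = max(abs(b) - lam / 2.0, 0.0)
    return shrunk if b >= 0 else -shrunk

def ridge_1d(b, lam):
    """Minimizer of (b - beta)**2 + lam * beta**2: shrink by a factor 1 / (1 + lam)."""
    return b / (1.0 + lam)

for estimate in (2.0, 0.3, -2.0):
    print(estimate, lasso_1d(estimate, 1.0), ridge_1d(estimate, 1.0))
```

The small estimate is set exactly to zero by the L1 penalty but only shrunk by the L2 penalty, which is why LASSO can act as a feature selector while RIDGE keeps every feature.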

Supervised Learning is when the labels are available. Unsupervised Learning is when the labels are unavailable. Get it? SUPERVISE the labels! Pun intended. That being said, do not mix up the two!!! This mistake is enough for the interviewer to cancel the interview. Another noob mistake people make is not normalizing the features before running the model.
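Normalizing can mean several things; one common choice is z-score standardization, which rescales a feature to zero mean and unit variance. The sketch below assumes that choice and uses made-up values.

```python
import math

def standardize(column):
    """Rescale values to zero mean and unit (population) standard deviation."""
    n = len(column)
    mean = sum(column) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in column) / n)
    return [(x - mean) / std for x in column]

scaled = standardize([2.0, 4.0, 6.0])
print(scaled)
```

In a real workflow the mean and standard deviation would typically be computed on the training data only and then reused for validation and test data.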

Hence, a rule of thumb. Linear and Logistic Regression are the most basic and commonly used Machine Learning algorithms out there. Before doing any analysis, fit one of them first as a benchmark. One common interview slip people make is starting their analysis with a more complex model like a Neural Network. No doubt, Neural Networks can be highly accurate. However, benchmarks are important.
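As a sketch of what a simple benchmark can look like, the code below fits a one-feature linear regression with the closed-form least-squares solution and records its mean squared error. The data and the names are hypothetical; the idea is only that any more complex model should be compared against a number like `baseline_mse`.

```python
def fit_line(x, y):
    """Closed-form least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    slope = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / sum(
        (a - mean_x) ** 2 for a in x
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept

def mean_squared_error(x, y, slope, intercept):
    """Average squared difference between predictions and targets."""
    return sum((slope * a + intercept - b) ** 2 for a, b in zip(x, y)) / len(x)

# Hypothetical data generated from y = 2x + 1.
x = [1.0, 2.0, 3.0, 4.0]
y = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(x, y)
baseline_mse = mean_squared_error(x, y, slope, intercept)
print(slope, intercept, baseline_mse)
```

If a neural network cannot clearly beat this kind of baseline on held-out data, the extra complexity is hard to justify.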
